Coherency in Network Systems

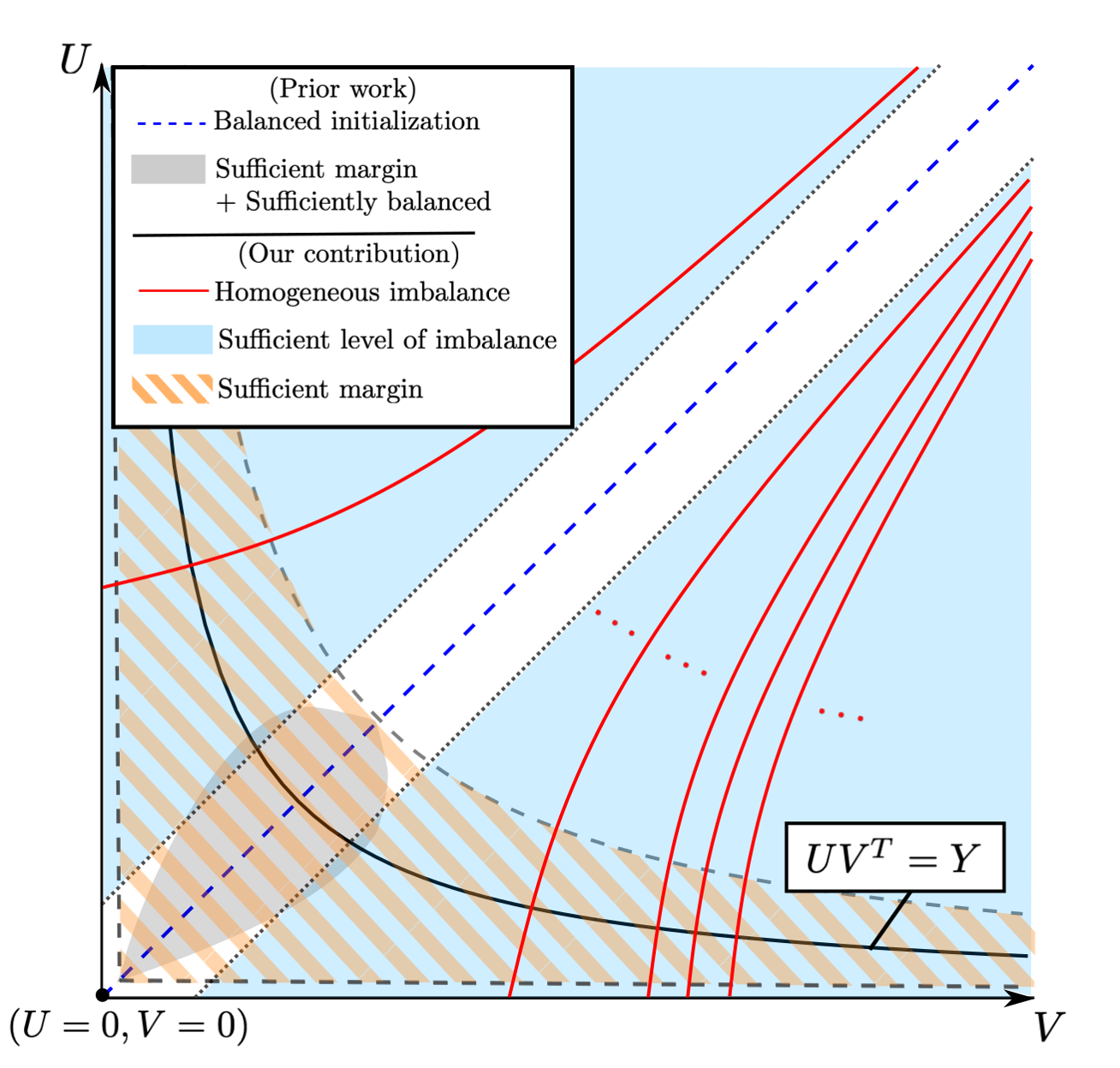

Coherence refers to the ability of a group of interconnected dynamical nodes to respond similarly when subject to certain disturbances. It is instrumental in understanding the collective behavior of large networks, including consensus networks, transportation networks, and power networks. Although network coherence has been studied for almost half a century, existing analyses focus on simple first- or second-order node dynamics and often assume the dynamics to be homogeneous or proportional. Moreover, when multiple coherent groups are present in the network, there has been little theoretical guidance on how to model the dynamic interaction among different groups.

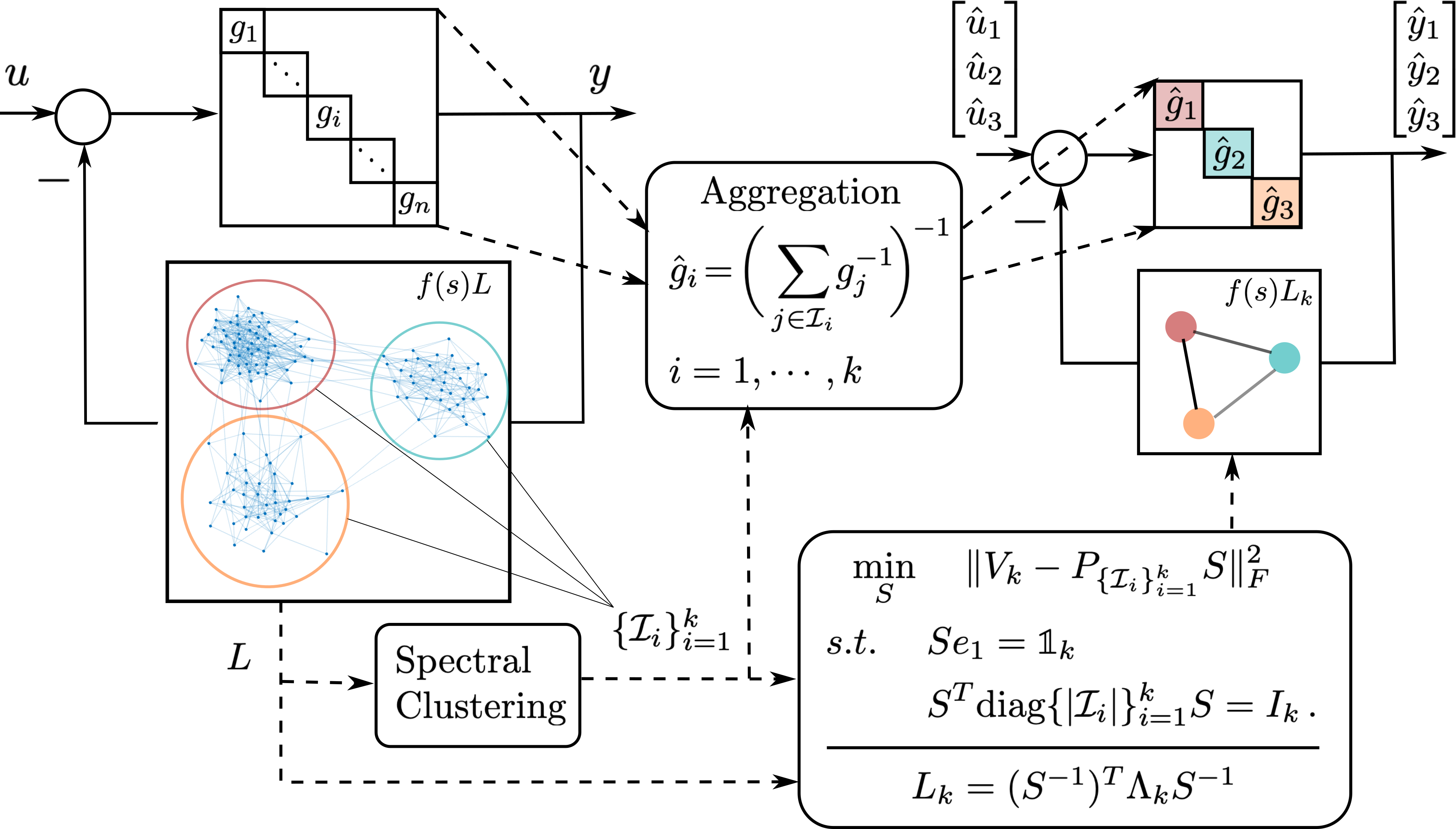

To address these issues, we developed a novel frequency-domain analysis of network coherence. Our work shows that coherent behavior corresponds to the network transfer matrix being approximately low rank in the frequency domain, a property that emerges as the network connectivity increases. The analysis encompasses heterogeneous node dynamics and leads to a theoretically justified aggregation model for a coherent group, one that is especially suitable for power networks. Moreover, we combine the coherence analysis with spectral clustering techniques to propose a structure-preserving reduction for large-scale networks with multiple weakly connected coherent subnetworks. Each node of the reduced network represents one coherent group, and the reduced graph characterizes the interactions among the groups in the original network. This approach yields a highly interpretable model of the interaction among coherent groups and opens new avenues for scalable control designs that leverage the reduced network model.
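The low-rank claim can be checked numerically on a toy network. The sketch below is not the model from the publications listed here. It assumes first-order node dynamics g_i(s) = 1/(m_i s + d_i), Laplacian feedback over a complete graph, and the closed-loop transfer matrix T(s) = (diag(1/g_i(s)) + L)^{-1}; every function name and parameter value is illustrative. As the edge weight grows, the ratio of the second to the first singular value of T(jw) should shrink, and T(jw) should approach the rank-one matrix 1 1^T / sum_i (1/g_i(jw)).

```python
import numpy as np


def complete_graph_laplacian(n, weight):
    """Laplacian of a complete graph on n nodes with a uniform edge weight."""
    return weight * (n * np.eye(n) - np.ones((n, n)))


def network_transfer_matrix(m, d, laplacian, omega):
    """T(jw) = (diag(1/g_i(jw)) + L)^{-1} with g_i(s) = 1/(m_i s + d_i) (assumed model)."""
    s = 1j * omega
    return np.linalg.inv(np.diag(m * s + d) + laplacian)


def coherent_limit(m, d, omega):
    """Rank-one matrix 1 1^T / sum_i(1/g_i(jw)) that T(jw) approaches for large weights."""
    s = 1j * omega
    n = len(m)
    return np.ones((n, n)) / np.sum(m * s + d)


rng = np.random.default_rng(0)
n = 8
m = rng.uniform(0.5, 2.0, n)  # heterogeneous inertia-like coefficients
d = rng.uniform(0.5, 2.0, n)  # heterogeneous damping-like coefficients
omega = 1.0                   # evaluation frequency in rad/s

weights = [0.1, 1.0, 10.0, 100.0, 1000.0]
rank_ratios, limit_errors = [], []
for w in weights:
    T = network_transfer_matrix(m, d, complete_graph_laplacian(n, w), omega)
    sigma = np.linalg.svd(T, compute_uv=False)
    rank_ratios.append(sigma[1] / sigma[0])  # a small ratio means T is close to rank one
    limit_errors.append(np.linalg.norm(T - coherent_limit(m, d, omega), 2))

for w, ratio, err in zip(weights, rank_ratios, limit_errors):
    print(f"weight={w:8.1f}  sigma2/sigma1={ratio:.2e}  error={err:.2e}")
```

A single frequency is evaluated here for brevity; sweeping `omega` over a band would show over which frequencies the rank-one approximation holds for a given connectivity.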

Related publications:

- H. Min and E. Mallada, “Spectral clustering and model reduction for weakly-connected coherent network systems,” in American Control Conference (ACC), 2023, pp. 2957-2962. doi:10.23919/ACC55779.2023.10156212


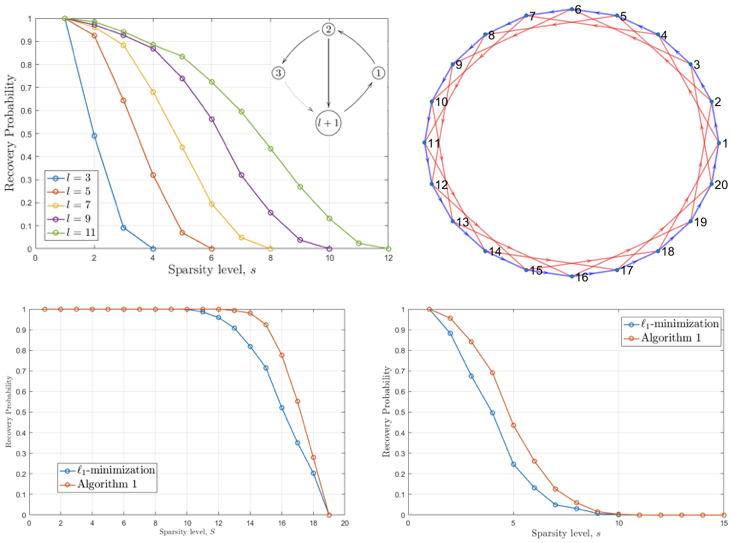

We propose a novel model-reduction methodology for large-scale dynamic networks with tightly-connected components. First, the coherent groups are identified by a spectral clustering algorithm on the graph Laplacian matrix that models the network feedback. Then, a reduced network is built, where each node represents the aggregate dynamics of each coherent group, and the reduced network captures the dynamic coupling between the groups. Our approach is theoretically justified under a random graph setting. Finally, numerical experiments align with and validate our theoretical findings.

@inproceedings{mm2023acc, author = {Min, Hancheng and Mallada, Enrique}, bdsk-url-3 = {https://doi.org/10.23919/ACC55779.2023.10156212}, booktitle = {American Control Conference (ACC)}, doi = {10.23919/ACC55779.2023.10156212}, grants = {CAREER-1752362, CPS-2136324}, month = {6}, pages = {2957-2962}, record = {presented Jun 2023, accepted Jan 2023, submitted Sep 2022}, title = {Spectral clustering and model reduction for weakly-connected coherent network systems}, url = {https://mallada.ece.jhu.edu/pubs/2023-ACC-MM.pdf}, year = {2023} }

- H. Min and E. Mallada, “Learning Coherent Clusters in Weakly-Connected Network Systems,” in Proceedings of The 5th Annual Learning for Dynamics and Control Conference, 2023, pp. 1167-1179.


We propose a structure-preserving model-reduction methodology for large-scale dynamic networks with tightly-connected components. First, the coherent groups are identified by a spectral clustering algorithm on the graph Laplacian matrix that models the network feedback. Then, a reduced network is built, where each node represents the aggregate dynamics of each coherent group, and the reduced network captures the dynamic coupling between the groups. We provide an upper bound on the approximation error when the network graph is randomly generated from a weighted stochastic block model. Finally, numerical experiments align with and validate our theoretical findings.

@inproceedings{mm2023l4dc, author = {Min, Hancheng and Mallada, Enrique}, booktitle = {Proceedings of The 5th Annual Learning for Dynamics and Control Conference}, editor = {Matni, Nikolai and Morari, Manfred and Pappas, George J.}, grants = {CAREER-1752362, TRIPODS-1934979, CPS-2136324}, month = {6}, pages = {1167--1179}, publisher = {PMLR}, record = {presented, accepted Mar 2022, submitted Nov 2022}, series = {Proceedings of Machine Learning Research}, title = {Learning Coherent Clusters in Weakly-Connected Network Systems}, url = {https://mallada.ece.jhu.edu/pubs/2023-L4DC-MM.pdf}, volume = {211}, year = {2023} }

- H. Min, F. Paganini, and E. Mallada, “Accurate Reduced Order Models for Coherent Heterogeneous Generators,” IEEE Control Systems Letters (L-CSS), vol. 5, iss. 5, pp. 1741-1746, 2021. doi:10.1109/LCSYS.2020.3043733


We introduce a novel framework to approximate the aggregate frequency dynamics of coherent synchronous generators. By leveraging recent results on dynamics concentration of tightly connected networks, we develop a hierarchy of reduced-order models, based on frequency-weighted balanced truncation, that accurately approximate the aggregate system response. Our results outperform existing aggregation techniques and can be shown to monotonically improve the approximation as the hierarchy order increases.

@article{mpm2021lcss, author = {Min, Hancheng and Paganini, Fernando and Mallada, Enrique}, bdsk-url-3 = {https://doi.org/10.1109/LCSYS.2020.3043733}, doi = {10.1109/LCSYS.2020.3043733}, grants = {CAREER-1752362, CPS-1544771, ENERGISE-DE-EE0008006, AMPS-1736448, TRIPODS-1934979, EPCN-1711188, ARO-W911NF-17-1-0092}, journal = {IEEE Control Systems Letters (L-CSS)}, month = {11}, note = {also in ACC 2021}, number = {5}, pages = {1741-1746}, record = {early access Nov 2020, accepted Nov 2020, revised Nov 2020, submitted Sep 2020}, title = {Accurate Reduced Order Models for Coherent Heterogeneous Generators}, url = {https://mallada.ece.jhu.edu/pubs/2021-LCSS-MPM.pdf}, volume = {5}, year = {2021} }

- H. Min and E. Mallada, “Dynamics Concentration of Tightly-Connected Large-Scale Networks,” in 58th IEEE Conference on Decision and Control (CDC), 2019, pp. 758-763. doi:10.1109/CDC40024.2019.9029796


The ability to achieve coordinated behavior, engineered or emergent, on networked systems has attracted widespread interest over several fields. This has led to remarkable advances in the development of a theoretical understanding of the conditions under which agents within a network can reach agreement (consensus) or develop coordinated behaviors such as synchronization. However, fewer advances have been made toward explaining another commonly observed phenomenon in tightly-connected network systems: output responses of nodes in the network are almost identical to each other despite heterogeneity in their individual dynamics. In this paper, we leverage tools from high-dimensional probability to provide an initial answer to this phenomenon. More precisely, we show that for linear networks of nodal random transfer functions, as the network's size and connectivity grow, every node in the network follows the same response to an input or disturbance, irrespective of the source of this input. We term this behavior dynamics concentration, as it stems from the fact that the network transfer matrix uniformly converges in probability to a unique dynamic response (i.e., it concentrates) determined by the distribution of the random transfer function of each node. We further discuss the implications of our analysis in the context of model reduction and robustness, and provide numerical evidence that similar phenomena occur in small deterministic networks over a properly defined frequency band.

@inproceedings{mm2019cdc, author = {Min, Hancheng and Mallada, Enrique}, bdsk-url-3 = {https://doi.org/10.1109/CDC40024.2019.9029796}, booktitle = {58th IEEE Conference on Decision and Control (CDC)}, doi = {10.1109/CDC40024.2019.9029796}, grants = {ARO-W911NF-17-1-0092, CPS-1544771, EPCN-1711188, CAREER-1752362, AMPS-1736448, ENERGISE-DE-EE0008006}, month = {12}, pages = {758-763}, title = {Dynamics Concentration of Tightly-Connected Large-Scale Networks}, url = {https://mallada.ece.jhu.edu/pubs/2019-CDC-MM.pdf}, year = {2019} }
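The clustering-and-aggregation pipeline described in this section (identify coherent groups by spectral clustering on the Laplacian, then build one aggregate node per group) can be sketched on a small example. This is a minimal illustration under stated assumptions, not the algorithm from the papers above: it assumes first-order node dynamics g_i(s) = 1/(m_i s + d_i), uses the sign of the Fiedler vector as a two-way spectral clustering, aggregates a group by summing the inverse node dynamics, and takes P^T L P as the reduced Laplacian, where P is the cluster-indicator matrix. All function names and parameter values are hypothetical.

```python
import numpy as np


def block_laplacian(sizes, w_in, w_out):
    """Laplacian of a graph with strong intra-group and weak inter-group edges."""
    labels = np.repeat(np.arange(len(sizes)), sizes)
    same_group = labels[:, None] == labels[None, :]
    adjacency = np.where(same_group, w_in, w_out).astype(float)
    np.fill_diagonal(adjacency, 0.0)
    return np.diag(adjacency.sum(axis=1)) - adjacency, labels


def fiedler_bipartition(laplacian):
    """Two-way spectral clustering: split nodes by the sign of the Fiedler vector."""
    _, eigvecs = np.linalg.eigh(laplacian)  # eigenvalues come back in ascending order
    return (eigvecs[:, 1] > 0).astype(int)


def transfer_matrix(m, d, laplacian, omega):
    """T(jw) = (diag(m_i jw + d_i) + L)^{-1} for the assumed first-order nodes."""
    s = 1j * omega
    return np.linalg.inv(np.diag(m * s + d) + laplacian)


def reduce_network(m, d, laplacian, clusters):
    """One node per cluster: summed inverse dynamics and reduced Laplacian P^T L P."""
    k = clusters.max() + 1
    P = np.eye(k)[clusters]  # n x k cluster-indicator matrix
    return P.T @ m, P.T @ d, P.T @ laplacian @ P, P


rng = np.random.default_rng(1)
L, labels = block_laplacian([5, 5], w_in=50.0, w_out=0.1)
n = L.shape[0]
m = rng.uniform(0.5, 2.0, n)
d = rng.uniform(0.5, 2.0, n)

clusters = fiedler_bipartition(L)
m_red, d_red, L_red, P = reduce_network(m, d, L, clusters)

omega = 0.5
T_full = transfer_matrix(m, d, L, omega)
# Lift the 2 x 2 reduced response back to the original nodes for comparison.
T_lifted = P @ transfer_matrix(m_red, d_red, L_red, omega) @ P.T
rel_error = np.linalg.norm(T_full - T_lifted, 2) / np.linalg.norm(T_full, 2)
print("clusters:", clusters, " relative error:", rel_error)
```

The reduced model keeps a network structure (a two-node graph with its own Laplacian), which is what makes the interaction between groups easy to read off. For more than two groups, the sign split would be replaced by clustering the leading Laplacian eigenvectors, for example with k-means.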

Scale-Free Analysis and Synthesis of Network Systems

Synchronous machines conventionally regulate the AC frequency in electrical power systems. The gradual replacement of these machines by asynchronous renewable-based generation, which provides little or no frequency control, increases system uncertainty and the risk of instability. This limits the proportion of renewables that can be integrated into the system. In this work, we develop a framework for performing frequency control in power systems with arbitrary conventional and renewable generation mixes.

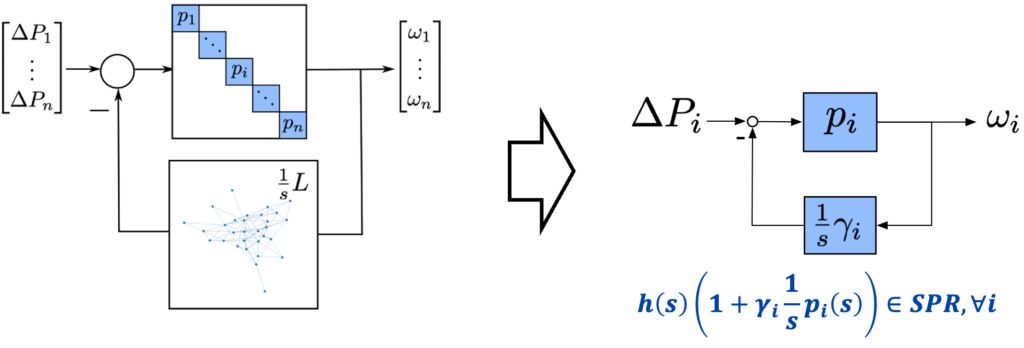

Our approach is based on a robust stability criterion that guarantees the stability of a full power system model through a set of decentralized tests, one for each component in the system. The criterion applies even to detailed, heterogeneous component models and can be verified using several standard frequency-response, state-space, and circuit-theoretic analysis tools. Designing decentralized controllers so that each component meets its test yields strong a priori robust stability guarantees that hold independently of the operating point and remain valid as components are added to or removed from the grid. Every component can thus contribute to system frequency regulation in a simple and provable way. Notably, our framework certifies the stability of several existing (non-passive) power system control schemes and models and allows for the study of robustness to delays.
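To give a feel for what a per-component frequency-response test looks like, the sketch below runs a sampled positive-real (passivity) check independently on each component. It is deliberately not the criterion developed in this work, which is less conservative and also certifies some non-passive schemes; it is the textbook passivity check, evaluated on a finite frequency grid. The first-order bus model, the delay value, and all names are hypothetical.

```python
import cmath


def first_order_bus(m, d):
    """Toy bus model p(s) = 1 / (m s + d); a stand-in for real component dynamics."""
    return lambda s: 1.0 / (m * s + d)


def with_delay(transfer, tau):
    """Cascade a transfer function with a pure delay exp(-s tau)."""
    return lambda s: cmath.exp(-s * tau) * transfer(s)


def min_real_part(transfer, omegas):
    """Smallest real part of transfer(jw) over the sampled frequencies."""
    return min(transfer(1j * w).real for w in omegas)


def passes_sampled_test(transfer, omegas):
    """Sampled positive-real check: Re transfer(jw) >= 0 at every grid point."""
    return min_real_part(transfer, omegas) >= 0.0


# Log-spaced frequency grid from 1e-3 to 1e3 rad/s.
omegas = [10 ** (k / 50 - 3) for k in range(301)]

components = {
    "bus_a": first_order_bus(m=2.0, d=1.0),
    "bus_b": first_order_bus(m=0.5, d=3.0),
    "bus_a_delayed": with_delay(first_order_bus(m=2.0, d=1.0), tau=0.5),
}

# One independent test per component; no network model is needed.
results = {name: passes_sampled_test(p, omegas) for name, p in components.items()}
print(results)
```

The delayed component fails the plain passivity check, which illustrates why a criterion that goes beyond passivity is needed to handle delays. A grid check of this kind gives numerical evidence only; it does not prove the property at frequencies between grid points.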

Related publications:

- R. Pates and E. Mallada, “Robust Scale Free Synthesis for Frequency Regulation in Power Systems,” IEEE Transactions on Control of Network Systems, vol. 6, iss. 3, pp. 1174-1184, 2019. doi:10.1109/TCNS.2019.2922503


This paper develops a framework for power system stability analysis that allows for the decentralised design of frequency controllers. The method builds on a novel decentralised stability criterion, expressed as a positive real requirement, that depends only on the dynamics of the components at each individual bus and the aggregate susceptance of the transmission lines connected to it. The criterion is robust both to network uncertainties and to heterogeneous network components, and it can be verified using several standard frequency response, state space, and circuit theory analysis tools. Moreover, it allows one to formulate a scale-free synthesis problem that depends on individual bus dynamics and leverages tools from H-infinity optimal control. Notably, unlike similar passivity methods, our framework certifies the stability of several existing (non-passive) power system control schemes and allows one to study robustness with respect to delays.

@article{pm2019tcns, author = {Pates, Richard and Mallada, Enrique}, bdsk-url-3 = {https://mallada.ece.jhu.edu/pubs/2019-TCNS-PM.pdf}, bdsk-url-4 = {http://dx.doi.org/10.1109/TCNS.2019.2922503}, doi = {10.1109/TCNS.2019.2922503}, grants = {CPS:1544771, EPCN-1711188, AMPS-1736448, CAREER-1752362}, journal = {IEEE Transactions on Control of Network Systems}, keywords = {Network Control; Power Networks}, month = {9}, number = {3}, pages = {1174-1184}, title = {Robust Scale Free Synthesis for Frequency Regulation in Power Systems}, url = {https://mallada.ece.jhu.edu/pubs/2019-TCNS-PM.pdf}, volume = {6}, year = {2019} }

Fundamental Limits of Contact Tracing in Epidemic Control

“The practical, physical (and sometimes dangerous) consequences of control must be respected, and the underlying principles must be clearly and well taught.”

— Gunter Stein, Respect the Unstable

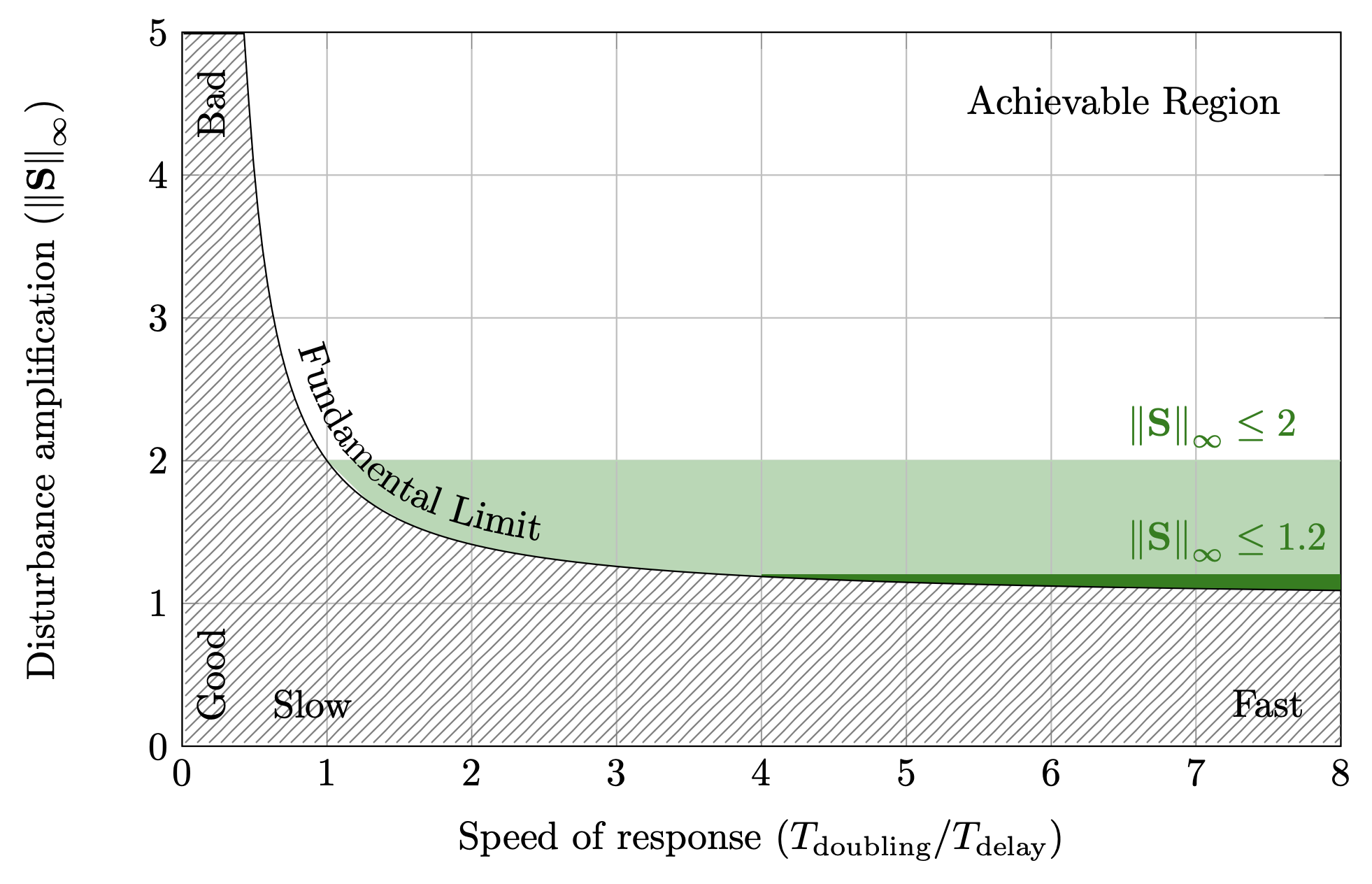

The control of disease spread is not the traditional hunting ground for control engineers, so a degree of caution from our community is perhaps of even greater relevance than normal. That said, controlling the spread of a disease has many of the elements of the most challenging control problems. Accurate models of the spread of a highly infectious disease are, at best, controversial but certainly unstable (at least in a population with high susceptibility to the disease). The mechanisms for identifying infectious members of the population may be subject to significant delays and inaccuracies, compromising the quality of the available information for performing feedback. And finally, the options for mitigating the spread can be blunt, unpredictable, and subject to severe capacity constraints.
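The interplay between instability and feedback delay can be illustrated with a deliberately simple simulation. The sketch below is a toy model, not the one analyzed in the publication listed in this section: it assumes a scalar quantity x(t) that grows exponentially at rate `growth` and is reduced in proportion to its value `delay` time units earlier, x'(t) = growth * x(t) - gain * x(t - delay). The function name and all parameter values are hypothetical, and the linear model ignores capacity limits and the fact that a real population count cannot go negative.

```python
import math


def simulate_delayed_removal(growth, gain, delay, horizon=20.0, dt=0.001):
    """Forward-Euler simulation of x'(t) = growth * x(t) - gain * x(t - delay).

    x is a toy proxy for the infectious population, with x(t) = 1 for t <= 0.
    """
    lag = int(round(delay / dt))
    steps = int(round(horizon / dt))
    x = [1.0] * (lag + 1)  # constant history on [-delay, 0]
    for _ in range(steps):
        current = x[-1]
        delayed = x[-1 - lag]  # value from `delay` time units ago
        x.append(current + dt * (growth * current - gain * delayed))
    return x[lag:]  # trajectory on [0, horizon]


growth = 1.0
doubling_time = math.log(2.0) / growth  # about 0.69 time units
gain = 2.0

fast = simulate_delayed_removal(growth, gain, delay=0.2)  # delay below doubling time
slow = simulate_delayed_removal(growth, gain, delay=1.5)  # delay above doubling time

half = len(fast) // 2
fast_late_peak = max(abs(v) for v in fast[half:])
slow_late_peak = max(abs(v) for v in slow[half:])
print(f"doubling time: {doubling_time:.2f}")
print(f"late peak with delay 0.2: {fast_late_peak:.3e}")
print(f"late peak with delay 1.5: {slow_late_peak:.3e}")
```

With the same feedback gain, the short delay lets the response die out, while the long delay leaves a growing oscillation. Only one gain is tried here, so the run shows the qualitative effect of delay rather than a sharp threshold.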

Related publications:

- R. Pates, A. Ferragut, E. Pivo, P. You, F. Paganini, and E. Mallada, “Respect the Unstable: Delays and Saturation in Contact Tracing for Disease Control,” SIAM Journal on Control and Optimization, vol. 60, iss. 2, pp. S196-S220, 2022. doi:10.1137/20M1377825


Motivated by the novel coronavirus disease (COVID-19) pandemic, this paper aims to apply Gunter Stein’s cautionary message of respecting the unstable to the problem of controlling the spread of an infectious disease. With this goal, we study the effect that delays and capacity constraints in the test, trace, and isolate (TeTrIs) process have on preventing exponential disease spread. Our analysis highlights the critical importance of speed and scale in the TeTrIs process. Precisely, having a delay in the TeTrIs process smaller than the doubling time of the disease spread is necessary for achieving acceptable performance. Similarly, limited TeTrIs capacity introduces a threshold on the size of an outbreak beyond which the disease spreads almost as in the uncontrolled case. Along the way, we provide numerical illustrations to highlight these points.

@article{pfpypm2022sicon, author = {Pates, Richard and Ferragut, Andres and Pivo, Elijah and You, Pengcheng and Paganini, Fernando and Mallada, Enrique}, bdsk-url-3 = {https://doi.org/10.1137/20M1377825}, doi = {10.1137/20M1377825}, grants = {CAREER-1752362, EPCN-1711188, AMPS-1736448, TRIPODS-1934979}, journal = {SIAM Journal on Control and Optimization}, month = {4}, number = {2}, pages = {S196-S220}, record = {accepted Dec 2021, revised Nov 2021, submitted Nov 2020}, title = {Respect the Unstable: Delays and Saturation in Contact Tracing for Disease Control}, url = {https://mallada.ece.jhu.edu/pubs/2022-SICON-PFPYPM.pdf}, volume = {60}, year = {2022} }

Synchronization of Coupled Oscillators

The study of synchronization of coupled oscillators has attracted the attention of widely diverse research disciplines ranging from biology and chemistry to engineering and physics. Since the seminal works of Winfree and Kuramoto, phase-coupled oscillators have served as a canonical synchronization model that can capture rich dynamic behavior, including multiple equilibria, limit cycles, and even chaos. In this work, we study how the interaction type (coupling), network configuration (topology), communication latencies (delay), and oscillator types (frequency heterogeneity) affect the behavior of a population of coupled oscillators.

Using this knowledge, we design a family of controllers that can guarantee phase consensus (common phase value) for arbitrary network topologies and heterogeneous natural frequencies of oscillation.
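For readers unfamiliar with the phase-coupled oscillator model, the sketch below simulates the classical Kuramoto-type dynamics theta_i' = omega_i + sum_j a_ij sin(theta_j - theta_i). It illustrates the baseline model only and is not one of the controllers designed in this work. The setup (identical natural frequencies, a complete graph, sine coupling, initial phases inside a half circle) is chosen because in-phase synchronization is expected there; the function names and parameter values are illustrative assumptions.

```python
import numpy as np


def simulate_kuramoto(theta0, omega, adjacency, dt=0.01, steps=3000):
    """Forward-Euler integration of theta_i' = omega_i + sum_j a_ij sin(theta_j - theta_i)."""
    theta = np.array(theta0, dtype=float)
    for _ in range(steps):
        phase_diff = theta[None, :] - theta[:, None]  # entry (i, j) is theta_j - theta_i
        theta = theta + dt * (omega + (adjacency * np.sin(phase_diff)).sum(axis=1))
    return theta


def order_parameter(theta):
    """Magnitude of the phase centroid; a value of 1 means all phases coincide."""
    return abs(np.exp(1j * theta).mean())


n = 10
adjacency = (np.ones((n, n)) - np.eye(n)) / n  # complete graph, coupling 1/n per edge
omega = np.zeros(n)                            # identical frequencies (rotating frame)
theta0 = np.linspace(0.0, 2.5, n)              # initial phases inside a half circle

r_initial = order_parameter(theta0)
theta_final = simulate_kuramoto(theta0, omega, adjacency)
r_final = order_parameter(theta_final)
print(f"order parameter: {r_initial:.3f} -> {r_final:.6f}")
```

Changing `adjacency` to a sparse topology, replacing `np.sin` with another coupling function, or drawing `omega` from a distribution gives a quick way to explore how topology, coupling, and frequency heterogeneity change the outcome.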

Related publications:

- A. Gushchin, E. Mallada, and A. Tang, “Phase-coupled oscillators with plastic coupling: Synchronization and stability,” IEEE Transactions on Network Science and Engineering, vol. 3, iss. 4, pp. 240-256, 2016. doi:10.1109/TNSE.2016.2605096


In this article, we study synchronization of systems of homogeneous phase-coupled oscillators with plastic coupling strengths and arbitrary underlying topology. The dynamics of the coupling strength between two oscillators is governed by the phase difference between these oscillators. We show that, under mild assumptions, such systems are gradient systems and always achieve frequency synchronization. Furthermore, we provide sufficient stability and instability conditions that are based on results from algebraic graph theory. For the special case in which the underlying topology is a tree, we formulate a criterion (a necessary and sufficient condition) for the stability of equilibria. For both tree and arbitrary topologies, we provide sufficient conditions for phase-locking, i.e., convergence to a stable equilibrium almost surely. We additionally find conditions under which the system possesses a unique stable equilibrium, so that almost global stability follows. Several examples are used to demonstrate the variety of equilibria the system has and their dependence on the system’s parameters, and to illustrate differences in behavior between systems with constant and plastic coupling strengths.

@article{gmt2016tnse, author = {Gushchin, Andrey and Mallada, Enrique and Tang, Ao}, doi = {10.1109/TNSE.2016.2605096}, journal = {IEEE Transactions on Network Science and Engineering}, keywords = {Synchronization}, month = {09}, number = {4}, pages = {240-256}, title = {Phase-coupled oscillators with plastic coupling: Synchronization and stability}, url = {https://mallada.ece.jhu.edu/pubs/2016-TNSE-GMT.pdf}, volume = {3}, year = {2016} }

- E. Mallada, R. A. Freeman, and A. Tang, “Distributed synchronization of heterogeneous oscillators on networks with arbitrary topology,” IEEE Transactions on Control of Network Systems, vol. 3, iss. 1, pp. 12-23, 2016. doi:10.1109/TCNS.2015.2428371


Many network applications rely on the synchronization of coupled oscillators. For example, such synchronization can provide networked devices with a common temporal reference necessary for coordinating actions or decoding transmitted messages. In this paper, we study the problem of using distributed control to achieve both phase and frequency synchronization of a network of coupled heterogeneous nonlinear oscillators. Not only do our controllers guarantee zero phase error in steady state under arbitrary frequency heterogeneity, but they also require little knowledge of the oscillator nonlinearities and network topology. Furthermore, we provide a global convergence analysis, in the absence of noise and propagation delay, for the resulting nonlinear system whose phase vector evolves on the n-torus.

@article{mft2016tcns, author = {Mallada, Enrique and Freeman, Randy A and Tang, Ao}, doi = {10.1109/TCNS.2015.2428371}, journal = {IEEE Transactions on Control of Network Systems}, keywords = {Coupled Oscillators; Synchronization}, month = {3}, number = {1}, pages = {12-23}, title = {Distributed synchronization of heterogeneous oscillators on networks with arbitrary topology}, url = {https://mallada.ece.jhu.edu/pubs/2016-TCNS-MFT.pdf}, volume = {3}, year = {2016} }

- E. Mallada and A. Tang, “Synchronization of weakly coupled oscillators: coupling, delay and topology,” Journal of Physics A: Mathematical and Theoretical, vol. 46, iss. 50, p. 505101, 2013. doi:10.1088/1751-8113/46/50/505101


There are three key factors in a system of coupled oscillators that characterize the interaction between them: coupling (how to affect), delay (when to affect) and topology (whom to affect). The existing work on each of these factors has mainly focused on special cases. With new angles and tools, this paper makes progress in relaxing some assumptions on these factors. There are three main results in this paper. Firstly, by using results from algebraic graph theory, a sufficient condition is obtained that can be used to check equilibrium stability. This condition works for arbitrary topology, generalizing existing results and also leading to a sufficient condition on the coupling function which guarantees that the system will reach synchronization. Secondly, it is known that identical oscillators with sin() coupling functions are guaranteed to synchronize in phase on a complete graph. Our results prove that in many cases certain structures such as symmetry and concavity, rather than the exact shape of the coupling function, are the keys for global synchronization. Finally, the effect of heterogeneous delays is investigated. Using mean field theory, a system of delayed coupled oscillators is approximated by a non-delayed one whose coupling depends on the delay distribution. This shows how the stability properties of the system depend on the delay distribution and allows us to predict its behavior. In particular, we show that for sin() coupling, heterogeneous delays are equivalent to homogeneous delays. Furthermore, we can use our novel sufficient instability condition to show that heterogeneity, i.e., a wider delay distribution, can help reach in-phase synchronization.

@article{mt2013jopa, author = {Mallada, Enrique and Tang, Ao}, doi = {10.1088/1751-8113/46/50/505101}, journal = {Journal of Physics A: Mathematical and Theoretical}, keywords = {Coupled Oscillators; Synchronization}, month = {12}, number = {50}, pages = {505101}, title = {Synchronization of weakly coupled oscillators: coupling, delay and topology}, url = {https://mallada.ece.jhu.edu/pubs/2013-JOPA-MT.pdf}, volume = {46}, year = {2013} }

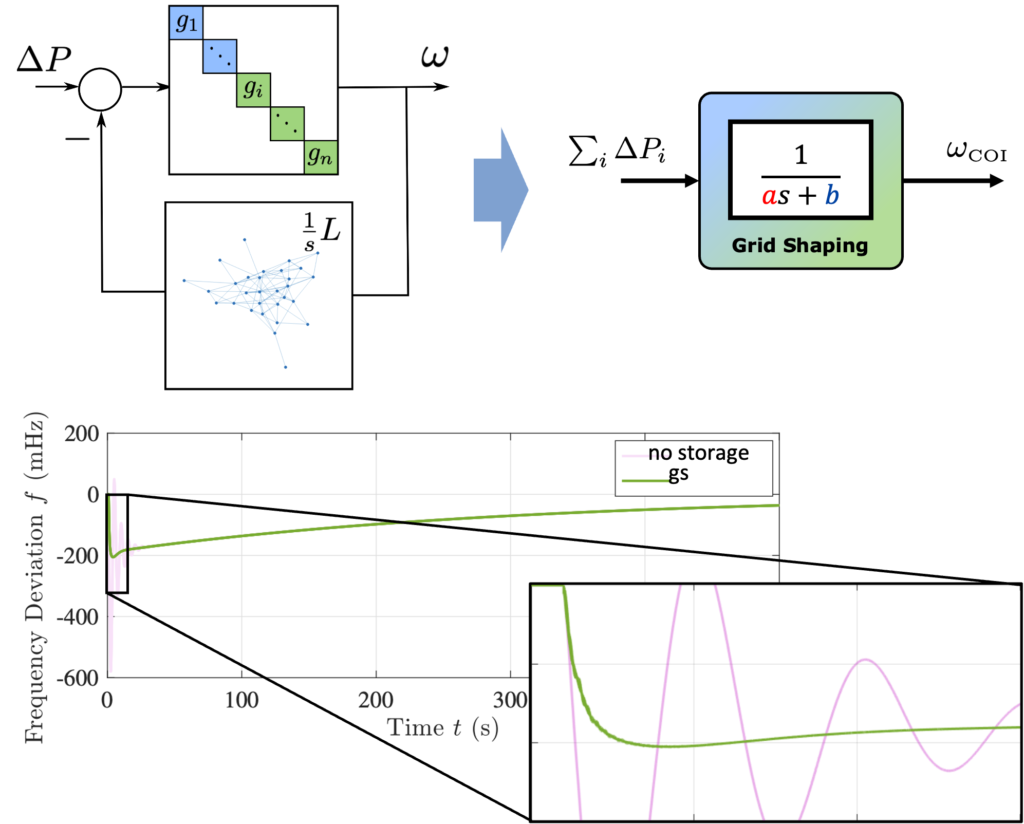

Grid Shaping Control for High-IBR Grids

The transition of power systems from conventional synchronous generation towards renewable energy sources, which provide little or no inertia, is gradually threatening classical methods for achieving grid synchronization. A widely embraced approach to mitigate this problem is to mimic inertial response using grid-connected inverters, that is, to introduce virtual inertia to restore the stiffness that the system used to enjoy. In this work, we seek to challenge this approach. We advocate taking advantage of the system’s low inertia to restore grid synchronism without incurring excessive control efforts. To this end, we develop an analysis and design framework for inverter-based frequency control. First, we develop novel stability analysis tools for power systems, which allow for the decentralized design of inverter-based controllers. The method requires that each inverter satisfy a standard H-infinity design requirement that depends on the dynamics of the components and inverters at each bus and the aggregate susceptance of the transmission lines connected to it. It is robust to network and delay uncertainty and, when no network information is available, reduces to the standard passivity condition for stability. Then, we propose a novel grid-forming control strategy, called grid shaping control, that aims to shape the frequency response of synchronous generators (SGs) to load perturbations so as to efficiently arrest sudden frequency drops. The approach builds on novel analysis tools that characterize the Center of Inertia (CoI) response of a system with both inverter-based resources (IBRs) and SGs and use this characterization to reshape it.
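The role that inertia plays in a sudden frequency drop can be seen in a toy simulation. The sketch below is not the grid shaping controller proposed in this work; it only illustrates the virtual-inertia idea that the paragraph above takes as its point of departure. It assumes a single aggregate swing equation with a first-order turbine and droop response, subjected to a unit load step; the model structure, parameter values, and function name are all illustrative assumptions.

```python
def frequency_nadir(inertia, damping=1.0, droop_gain=4.0, turbine_tau=5.0,
                    load_step=1.0, horizon=40.0, dt=0.001):
    """Lowest frequency deviation after a load step in a toy swing-plus-turbine model.

    inertia * w'     = -damping * w + q - load_step
    turbine_tau * q' = -q - droop_gain * w
    where w is the frequency deviation and q the turbine power adjustment.
    """
    w, q = 0.0, 0.0
    nadir = 0.0
    for _ in range(int(round(horizon / dt))):
        dw = (-damping * w + q - load_step) / inertia
        dq = (-q - droop_gain * w) / turbine_tau
        w, q = w + dt * dw, q + dt * dq  # forward-Euler update of both states
        nadir = min(nadir, w)
    return nadir


# Steady-state deviation: -load_step / (damping + droop_gain), independent of inertia.
steady_state = -1.0 / (1.0 + 4.0)

nadir_low_inertia = frequency_nadir(inertia=0.5)
nadir_with_virtual_inertia = frequency_nadir(inertia=5.0)  # inertia raised tenfold

print(f"steady state:              {steady_state:.3f}")
print(f"nadir, low inertia:        {nadir_low_inertia:.3f}")
print(f"nadir, added inertia:      {nadir_with_virtual_inertia:.3f}")
```

In this toy model, adding inertia makes the nadir shallower but the frequency still dips below its steady-state value, and the inverter must supply power proportional to the rate of change of frequency to emulate that inertia.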

Related publications:

- B. K. Poolla, Y. Lin, A. Bernstein, E. Mallada, and D. Groß, “Frequency shaping control for weakly-coupled grid-forming IBRs,” IEEE Control Systems Letters (L-CSS), pp. 937-942, 2022. doi:10.1109/LCSYS.2022.3228855


We introduce a novel framework to approximate the aggregate frequency dynamics of coherent synchronous generators. By leveraging recent results on dynamics concentration of tightly connected networks, we develop a hierarchy of reduced order models –based on frequency weighted balanced truncation– that accurately approximate the aggregate system response. Our results outperform existing aggregation techniques and can be shown to monotonically improve the approximation as the hierarchy order increases.

@article{plbmg2023lcss, abstract = {We introduce a novel framework to approximate the aggregate frequency dynamics of coherent synchronous generators. By leveraging recent results on dynamics concentration of tightly connected networks, we develop a hierarchy of reduced order models --based on frequency weighted balanced truncation-- that accurately approximate the aggregate system response. Our results outperform existing aggregation techniques and can be shown to monotonically improve the approximation as the hierarchy order increases.}, author = {Poolla, Bala Kameshwar and Lin, Yashen and Bernstein, Andrey and Mallada, Enrique and Groß, Dominic}, bdsk-url-3 = {https://doi.org/10.1109/LCSYS.2022.3228855}, doi = {10.1109/LCSYS.2022.3228855}, grants = {CAREER-1752362, CPS-2136324}, journal = {IEEE Control Systems Letters (L-CSS)}, month = {12}, pages = {937-942}, record = {published, online Dec 2022, accepted Nov 2022, submitted Sep 2022.}, title = {Frequency shaping control for weakly-coupled grid-forming IBRs}, url = {https://mallada.ece.jhu.edu/pubs/2022-LCSS-PLBMG.pdf}, year = {2022} }

- Y. Jiang, A. Bernstein, P. Vorobev, and E. Mallada, “Grid-forming frequency shaping control in low inertia power systems,” IEEE Control Systems Letters (L-CSS), vol. 5, iss. 6, pp. 1988-1993, 2021. doi:10.1109/LCSYS.2020.3044551


We introduce a novel framework to approximate the aggregate frequency dynamics of coherent synchronous generators. By leveraging recent results on dynamics concentration of tightly connected networks, we develop a hierarchy of reduced order models –based on frequency weighted balanced truncation– that accurately approximate the aggregate system response. Our results outperform existing aggregation techniques and can be shown to monotonically improve the approximation as the hierarchy order increases.

@article{jbvm2021lcss, abstract = {We introduce a novel framework to approximate the aggregate frequency dynamics of coherent synchronous generators. By leveraging recent results on dynamics concentration of tightly connected networks, we develop a hierarchy of reduced order models --based on frequency weighted balanced truncation-- that accurately approximate the aggregate system response. Our results outperform existing aggregation techniques and can be shown to monotonically improve the approximation as the hierarchy order increases.}, author = {Jiang, Yan and Bernstein, Andrey and Vorobev, Petr and Mallada, Enrique}, bdsk-url-3 = {https://doi.org/10.1109/LCSYS.2020.3044551}, doi = {10.1109/LCSYS.2020.3044551}, grants = {CAREER-1752362, AMPS-1736448, TRIPODS-1934979, EPCN-1711188, CPS-2136324}, journal = {IEEE Control Systems Letters (L-CSS)}, month = {12}, note = {also in ACC 2021}, number = {6}, pages = {1988-1993}, record = {early access Dec 2020, accepted Nov 2020, revised Nov 2020, submitted Sep 2020}, title = {Grid-forming frequency shaping control in low inertia power systems}, url = {https://mallada.ece.jhu.edu/pubs/2021-LCSS-JBVM.pdf}, volume = {5}, year = {2021} }

- Y. Jiang, E. Cohn, P. Vorobev, and E. Mallada, “Storage-Based Frequency Shaping Control,” IEEE Transactions on Power Systems, vol. 36, iss. 6, pp. 5006-5019, 2021. doi:10.1109/TPWRS.2021.3072833


With the decrease in system inertia, frequency security becomes an issue for power systems around the world. Energy storage systems (ESS), due to their excellent ramping capabilities, are considered as a natural choice for the improvement of frequency response following major contingencies. In this manuscript, we propose a new strategy for energy storage — frequency shaping control — that allows to completely eliminate the frequency Nadir, one of the main issue in frequency security, and at the same time tune the rate of change of frequency (RoCoF) to a desired value. With Nadir eliminated, the frequency security assessment can be performed via simple algebraic calculations, as opposed to dynamic simulations for conventional control strategies. Moreover, our proposed control is also very efficient in terms of the requirements on storage peak power, requiring up to 40% less power than conventional virtual inertia approach for the same performance.

@article{jcvm2021tps, abstract = {With the decrease in system inertia, frequency security becomes an issue for power systems around the world. Energy storage systems (ESS), due to their excellent ramping capabilities, are considered as a natural choice for the improvement of frequency response following major contingencies. In this manuscript, we propose a new strategy for energy storage -- frequency shaping control -- that allows to completely eliminate the frequency Nadir, one of the main issue in frequency security, and at the same time tune the rate of change of frequency (RoCoF) to a desired value. With Nadir eliminated, the frequency security assessment can be performed via simple algebraic calculations, as opposed to dynamic simulations for conventional control strategies. Moreover, our proposed control is also very efficient in terms of the requirements on storage peak power, requiring up to 40% less power than conventional virtual inertia approach for the same performance.}, author = {Jiang, Yan and Cohn, Eliza and Vorobev, Petr and Mallada, Enrique}, bdsk-url-3 = {https://doi.org/10.1109/TPWRS.2021.3072833}, doi = {10.1109/TPWRS.2021.3072833}, grants = {CAREER-1752362;CPS-2136324}, journal = {IEEE Transactions on Power Systems}, month = {11}, number = {6}, pages = {5006-5019}, record = {early access Apr 2021, accepted Mar 2021, revised Oct 2020, submitted May 2020}, title = {Storage-Based Frequency Shaping Control}, url = {https://mallada.ece.jhu.edu/pubs/2021-TPS-JCVM.pdf}, volume = {36}, year = {2021} }

- Y. Jiang, R. Pates, and E. Mallada, “Dynamic Droop Control in Low Inertia Power Systems,” IEEE Transactions on Automatic Control, vol. 66, iss. 8, pp. 3518-3533, 2021. doi:10.1109/TAC.2020.3034198


A widely embraced approach to mitigate the dynamic degradation in low-inertia power systems is to mimic generation response using grid-connected inverters to restore the grid’s stiffness. In this paper, we seek to challenge this approach and advocate for a principled design based on a systematic analysis of the performance trade-offs of inverter-based frequency control. With this aim, we perform a qualitative and quantitative study comparing the effect of conventional control strategies –droop control (DC) and virtual inertia (VI)– on several performance metrics induced by L2 and L∞ signal norms. By extending a recently proposed modal decomposition method, we capture the effect of step and stochastic power disturbances, and frequency measurement noise, on the overall transient and steady-state behavior of the system. Our analysis unveils several limitations of these solutions, such as the inability of DC to improve dynamic frequency response without increasing steady-state control effort, or the large frequency variance that VI introduces in the presence of measurement noise. We further propose a novel dynam-i-c Droop controller (iDroop) that overcomes the limitations of DC and VI. More precisely, we show that iDroop can be tuned to achieve high noise rejection, fast system-wide synchronization, or frequency overshoot (Nadir) elimination without affecting the steady-state control effort share, and propose a tuning recommendation that strikes a balance among these objectives. Extensive numerical experimentation shows that the proposed tuning is effective even when our proportionality assumptions are not valid, and that the particular tuning used for Nadir elimination strikes a good trade-off among various performance metrics.

@article{jpm2021tac, abstract = {A widely embraced approach to mitigate the dynamic degradation in low-inertia power systems is to mimic generation response using grid-connected inverters to restore the grid's stiffness. In this paper, we seek to challenge this approach and advocate for a principled design based on a systematic analysis of the performance trade-offs of inverter-based frequency control. With this aim, we perform a qualitative and quantitative study comparing the effect of conventional control strategies --droop control (DC) and virtual inertia (VI)-- on several performance metrics induced by L2 and L∞ signal norms. By extending a recently proposed modal decomposition method, we capture the effect of step and stochastic power disturbances, and frequency measurement noise, on the overall transient and steady-state behavior of the system. Our analysis unveils several limitations of these solutions, such as the inability of DC to improve dynamic frequency response without increasing steady-state control effort, or the large frequency variance that VI introduces in the presence of measurement noise. We further propose a novel dynam-i-c Droop controller (iDroop) that overcomes the limitations of DC and VI. More precisely, we show that iDroop can be tuned to achieve high noise rejection, fast system-wide synchronization, or frequency overshoot (Nadir) elimination without affecting the steady-state control effort share, and propose a tuning recommendation that strikes a balance among these objectives. Extensive numerical experimentation shows that the proposed tuning is effective even when our proportionality assumptions are not valid, and that the particular tuning used for Nadir elimination strikes a good trade-off among various performance metrics.}, author = {Jiang, Yan and Pates, Richard and Mallada, Enrique}, bdsk-url-3 = {https://doi.org/10.1109/TAC.2020.3034198}, doi = {10.1109/TAC.2020.3034198}, grants = {ENERGISE-DE-EE0008006, EPCN-1711188,AMPS-1736448, CPS-1544771, CAREER-1752362, AMPS-1736448, ARO-W911NF-17-1-0092}, journal = {IEEE Transactions on Automatic Control}, month = {8}, number = {8}, pages = {3518-3533}, record = {available online Nov. 2020, accepted Aug. 2020, revised Mar. 2020, submitted Aug. 2019}, title = {Dynamic Droop Control in Low Inertia Power Systems}, url = {https://mallada.ece.jhu.edu/pubs/2021-TAC-JPM.pdf}, volume = {66}, year = {2021} }

- R. Pates and E. Mallada, “Robust Scale Free Synthesis for Frequency Regulation in Power Systems,” IEEE Transactions on Control of Network Systems, vol. 6, iss. 3, pp. 1174-1184, 2019. doi:10.1109/TCNS.2019.2922503


This paper develops a framework for power system stability analysis, that allows for the decentralised design of frequency controllers. The method builds on a novel decentralised stability criterion, expressed as a positive real requirement, that depends only on the dynamics of the components at each individual bus, and the aggregate susceptance of the transmission lines connected to it. The criterion is both robust to network uncertainties as well as heterogeneous network components, and it can be verified using several standard frequency response, state space, and circuit theory analysis tools. Moreover, it allows to formulate a scale free synthesis problem, that depends on individual bus dynamics and leverages tools from Hinf optimal control. Notably, unlike similar passivity methods, our framework certifies the stability of several existing (non-passive) power system control schemes and allows to study robustness with respect to delays.

@article{pm2019tcns, abstract = {This paper develops a framework for power system stability analysis, that allows for the decentralised design of frequency controllers. The method builds on a novel decentralised stability criterion, expressed as a positive real requirement, that depends only on the dynamics of the components at each individual bus, and the aggregate susceptance of the transmission lines connected to it. The criterion is both robust to network uncertainties as well as heterogeneous network components, and it can be verified using several standard frequency response, state space, and circuit theory analysis tools. Moreover, it allows to formulate a scale free synthesis problem, that depends on individual bus dynamics and leverages tools from Hinf optimal control. Notably, unlike similar passivity methods, our framework certifies the stability of several existing (non-passive) power system control schemes and allows to study robustness with respect to delays.}, author = {Pates, Richard and Mallada, Enrique}, bdsk-url-3 = {https://mallada.ece.jhu.edu/pubs/2019-TCNS-PM.pdf}, bdsk-url-4 = {http://dx.doi.org/10.1109/TCNS.2019.2922503}, doi = {10.1109/TCNS.2019.2922503}, grants = {CPS:1544771, EPCN-1711188, AMPS-1736448, CAREER-1752362}, journal = {IEEE Transactions on Control of Network Systems}, keywords = {Network Control; Power Networks}, month = {9}, number = {3}, pages = {1174-1184}, title = {Robust Scale Free Synthesis for Frequency Regulation in Power Systems}, url = {https://mallada.ece.jhu.edu/pubs/2019-TCNS-PM.pdf}, volume = {6}, year = {2019} }

Storage Degradation Aware Market Design

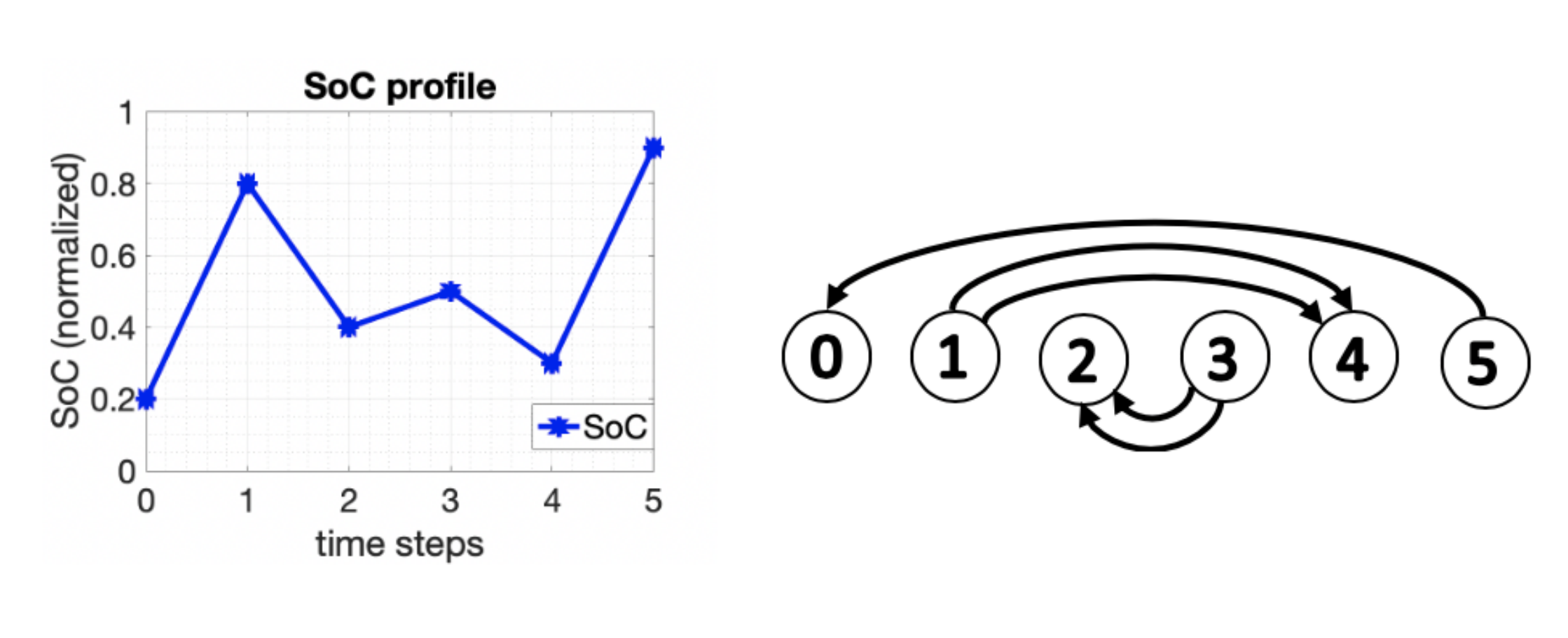

Lithium-ion battery storage stands out as one of the most rapidly expanding storage solutions for the power grid. Unlike traditional generators, however, the cost of dispatching storage cannot be quantified in terms of supplied power alone. The operational cost of battery storage is significantly influenced by the degradation that results from repeated charging and discharging half-cycles. These storage-specific costs are presently overlooked in market settlements, which adversely impacts the overall profitability of storage systems. The most widely used storage cost models take a cycle-based degradation approach that assesses the cost of each half-cycle (charging or discharging) based on its depth. These models are commonly combined with the Rainflow cycle counting algorithm, which extracts charging and discharging half-cycles from a storage State of Charge (SoC) profile.
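To make the cycle-based cost model concrete, the sketch below extracts half-cycles and full cycles from an SoC profile with a three-point Rainflow counting rule and prices each one by its depth. The counting rule follows the standard three-point procedure (as in ASTM E1049); the quadratic depth cost `alpha * depth**2` and the names `turning_points`, `rainflow_cycles`, and `degradation_cost` are illustrative assumptions, not the cost model used in the publications below.

```python
def turning_points(soc):
    """Reduce an SoC profile to its local extrema (plus first and last samples)."""
    points = []
    for value in soc:
        if points and value == points[-1]:
            continue  # skip flat segments
        if len(points) >= 2 and (points[-1] - points[-2]) * (value - points[-1]) > 0:
            points[-1] = value  # still moving in the same direction
        else:
            points.append(value)
    return points


def rainflow_cycles(soc):
    """Return (depth, count) pairs; count is 1.0 for a full cycle, 0.5 for a half-cycle."""
    stack, cycles = [], []
    for point in turning_points(soc):
        stack.append(point)
        while len(stack) >= 3:
            newest = abs(stack[-1] - stack[-2])
            previous = abs(stack[-2] - stack[-3])
            if newest < previous:
                break
            if len(stack) == 3:
                # The closed range includes the starting point: count a half-cycle.
                cycles.append((previous, 0.5))
                stack.pop(0)
            else:
                # An inner range is closed: count a full cycle and drop its two points.
                cycles.append((previous, 1.0))
                del stack[-3:-1]
    # Whatever remains on the stack is counted as half-cycles.
    cycles.extend((abs(b - a), 0.5) for a, b in zip(stack, stack[1:]))
    return cycles


def degradation_cost(soc, alpha=1.0):
    """Assumed cost model: each full cycle of depth d costs alpha * d**2."""
    return sum(count * alpha * depth ** 2 for depth, count in rainflow_cycles(soc))


if __name__ == "__main__":
    profile = [0.5, 0.9, 0.6, 0.8, 0.2, 0.5]  # hypothetical SoC samples in [0, 1]
    print(rainflow_cycles(profile))
    print(degradation_cost(profile))
```

Because the assumed cost is convex in depth, one deep cycle is priced higher than several shallow cycles with the same total energy throughput, which is the behavior a throughput-only cost would miss.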

Related publications:

- R. K. Bansal, P. You, D. F. Gayme, and E. Mallada, “A Market Mechanism for Truthful Bidding with Energy Storage,” in Power Systems Computation Conference (PSCC), 2022, pp. 1-9.


This paper proposes a market mechanism for multi-interval electricity markets with generator and storage participants. Drawing ideas from supply function bidding, we introduce a novel bid structure for storage participation that allows storage units to communicate their cost to the market using energy cycling functions that map prices to cycle depths. The resulting market-clearing process–implemented via convex programming–yields corresponding schedules and payments based on traditional energy prices for power supply and per-cycle prices for storage utilization. We illustrate the benefits of our solution by comparing the competitive equilibrium of the resulting mechanism to that of an alternative solution that uses prosumer-based bids. Our solution shows several advantages over the prosumer-based approach. It does not require a priori price estimation. It also incentivizes participants to reveal their truthful costs, thus leading to an efficient, competitive equilibrium. Numerical experiments using New York Independent System Operator (NYISO) data validate our findings.

@inproceedings{bygm2022pscc, abstract = {This paper proposes a market mechanism for multi-interval electricity markets with generator and storage participants. Drawing ideas from supply function bidding, we introduce a novel bid structure for storage participation that allows storage units to communicate their cost to the market using energy cycling functions that map prices to cycle depths. The resulting market-clearing process--implemented via convex programming--yields corresponding schedules and payments based on traditional energy prices for power supply and per-cycle prices for storage utilization. We illustrate the benefits of our solution by comparing the competitive equilibrium of the resulting mechanism to that of an alternative solution that uses prosumer-based bids. Our solution shows several advantages over the prosumer-based approach. It does not require a priori price estimation. It also incentivizes participants to reveal their truthful costs, thus leading to an efficient, competitive equilibrium. Numerical experiments using New York Independent System Operator (NYISO) data validate our findings.}, author = {Bansal, Rajni Kant and You, Pengcheng and Gayme, Dennice F. and Mallada, Enrique}, booktitle = {Power Systems Computation Conference (PSCC)}, grants = {CAREER-1752362, TRIPODS-1934979, CPS-2136324}, month = {7}, pages = {1-9}, record = {presented, accepted Feb. 2022, submitted Oct. 2021}, title = {A Market Mechanism for Truthful Bidding with Energy Storage}, url = {https://mallada.ece.jhu.edu/pubs/2022-PSCC-BYGM.pdf}, year = {2022} }

- R. K. Bansal, P. You, D. F. Gayme, and E. Mallada, “Storage Degradation Aware Economic Dispatch,” in American Control Conference (ACC), 2021, pp. 589-595. doi:10.23919/ACC50511.2021.9482838


In this paper, we formulate a cycling cost aware economic dispatch problem that co-optimizes generation and storage dispatch while taking into account cycle based storage degradation cost. Our approach exploits the Rainflow cycle counting algorithm to quantify storage degradation for each charging and discharging half-cycle based on its depth. We show that the dispatch is optimal for individual participants in the sense that it maximizes the profit of generators and storage units, under price taking assumptions. We further provide a condition under which the optimal storage response is unique for given market clearing prices. Simulations using data from the New York Independent System Operator (NYISO) illustrate the optimization framework. In particular, they show that the generation-centric dispatch that does not account for storage degradation is insufficient to guarantee storage profitability.

@inproceedings{bygm2021acc, abstract = {In this paper, we formulate a cycling cost aware economic dispatch problem that co-optimizes generation and storage dispatch while taking into account cycle based storage degradation cost. Our approach exploits the Rainflow cycle counting algorithm to quantify storage degradation for each charging and discharging half-cycle based on its depth. We show that the dispatch is optimal for individual participants in the sense that it maximizes the profit of generators and storage units, under price taking assumptions. We further provide a condition under which the optimal storage response is unique for given market clearing prices. Simulations using data from the New York Independent System Operator (NYISO) illustrate the optimization framework. In particular, they show that the generation-centric dispatch that does not account for storage degradation is insufficient to guarantee storage profitability. }, author = {Bansal, Rajni Kant and You, Pengcheng and Gayme, Dennice F. and Mallada, Enrique}, bdsk-url-3 = {https://doi.org/10.23919/ACC50511.2021.9482838}, booktitle = {American Control Conference (ACC)}, doi = {10.23919/ACC50511.2021.9482838}, grants = {CPS-1544771, EPCN-1711188, CAREER-1752362, TRIPODS-1934979}, month = {5}, pages = {589-595}, record = {submitted Sep. 2020, accepted Jan. 2021}, title = {Storage Degradation Aware Economic Dispatch}, url = {https://mallada.ece.jhu.edu/pubs/2021-ACC-BYGM.pdf}, year = {2021} }

Incentive Misalignment in Two-Stage Settlement Electricity Markets

Deregulated markets are designed to enable efficient trading, maximizing social surplus in order to attract investment and foster economic prosperity. Alongside spot markets, which facilitate immediate commodity delivery, forward contracting is a pivotal platform for transactions focused on future delivery. Forward contracting is intended to bolster market efficiency by enabling participants to hedge risks and by promoting trade. It is used across diverse markets, including finance, cloud computing, natural gas, and electricity. Our investigation into the dynamics of two-stage sequential markets is motivated by electricity markets, where up to 95% of energy is exchanged through forward contracts. Recognizing the potential for inefficiencies in these market designs, we emphasize the consequential social and economic losses that may accrue over the long term.

Related publications:

- R. K. Bansal, Y. Chen, P. You, and E. Mallada, “Market Power Mitigation in Two-stage Electricity Market with Supply Function and Quantity Bidding,” IEEE Transactions on Energy Markets, Policy and Regulation, vol. 1, iss. 4, pp. 512-522, 2023. doi:10.1109/TEMPR.2023.3318149


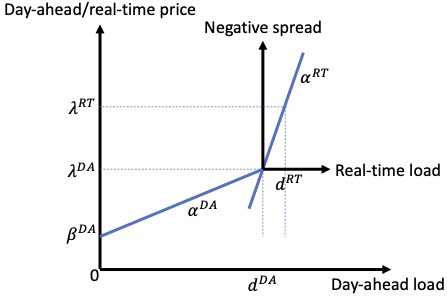

The main goal of a sequential two-stage electricity market (e.g., day-ahead and real-time markets) is to operate efficiently. However, the price difference across stages due to inadequate competition and unforeseen circumstances leads to undesirable price manipulation. To mitigate this, some Independent System Operators (ISOs) proposed system-level market power mitigation (MPM) policies in addition to existing local policies. These policies aim to substitute noncompetitive bids with a default bid based on estimated generator costs. However, these policies may lead to unintended consequences when implemented without accounting for the conflicting interest of participants. In this paper, we model the competition between generators (bidding supply functions) and loads (bidding quantity) in a two-stage market with a stage-wise MPM policy. An equilibrium analysis shows that a real-time MPM policy leads to equilibrium loss, meaning no stable market outcome (Nash equilibrium) exists. A day-ahead MPM policy, besides, leads to a Stackelberg-Nash game with loads acting as leaders and generators as followers. In this setting, loads become winners, i.e., their aggregate payment is always less than competitive payments. Moreover, comparison with standard market equilibrium highlights that markets are better off without such policies. Finally, numerical studies highlight the impact of heterogeneity and load size on market equilibrium.

@article{bcym2023tempr, abstract = {The main goal of a sequential two-stage electricity market---e.g., day-ahead and real-time markets---is to operate efficiently. However, the price difference across stages due to inadequate competition and unforeseen circumstances leads to undesirable price manipulation. To mitigate this, some Independent System Operators (ISOs) proposed system-level market power mitigation (MPM) policies in addition to existing local policies. These policies aim to substitute noncompetitive bids with a default bid based on estimated generator costs. However, these policies may lead to unintended consequences when implemented without accounting for the conflicting interest of participants. In this paper, we model the competition between generators (bidding supply functions) and loads (bidding quantity) in a two-stage market with a stage-wise MPM policy. An equilibrium analysis shows that a real-time MPM policy leads to equilibrium loss, meaning no stable market outcome (Nash equilibrium) exists. A day-ahead MPM policy, besides, leads to a Stackelberg-Nash game with loads acting as leaders and generators as followers. In this setting, loads become winners, i.e., their aggregate payment is always less than competitive payments. Moreover, comparison with standard market equilibrium highlights that markets are better off without such policies. Finally, numerical studies highlight the impact of heterogeneity and load size on market equilibrium.}, author = {Bansal, Rajni Kant and Chen, Yue and You, Pengcheng and Mallada, Enrique}, bdsk-url-3 = {https://doi.org/10.1109/TEMPR.2023.3318149}, doi = {10.1109/TEMPR.2023.3318149}, grants = {CAREER-1752362, CPS-2136324, EPICS-2330450}, journal = {IEEE Transactions on Energy Markets, Policy and Regulation}, month = {12}, number = {4}, pages = {512-522}, record = {published, online Sep 2023, revised July 2023, under revision May 2023, submitted Jan 2023}, title = {Market Power Mitigation in Two-stage Electricity Market with Supply Function and Quantity Bidding}, url = {https://mallada.ece.jhu.edu/pubs/2023-TEMPR-BCYM.pdf}, volume = {1}, year = {2023} }

- P. You, M. Fernandez, D. F. Gayme, and E. Mallada, Mixed Supply Function and Quantity Bidding in Two-Stage Settlement Markets, 2023, under revision, submitted Mar 2023.


Motivated by electricity markets, we study the incentives of heterogeneous participants (firms and consumers) in a two-stage settlement market with a mixed bidding mechanism, in which firms participate using supply function bids and consumers use quantity bids. We carry out an equilibrium analysis of the market outcome and obtain closed-form solutions. The characterization of the equilibria allows us to gain insights into the market-power implications of mixed bidding and uncover the importance of accounting for consumers’ strategic behavior in a two-stage market, even when their demand is completely inelastic with respect to price. We show that strategic consumers are able to exploit firms’ strategic behavior to maintain a systematic difference between the forward and spot prices, with the latter being higher. Notably, such a strategy does bring down consumer payment and undermines the supply-side market power. However, it is only effective when firms are behaving strategically. We also observe situations where firms lose profit by behaving strategically, a sign of overturn of the conventional supply-side market power. Our results further suggest that market competition has a heterogeneous impact across consumer sizes, particularly benefiting small consumers. Our analysis can accommodate other market policies, and we demonstrate this versatility by examining the impact of some example policies, including virtual bidding, on the market outcome.

@unpublished{yfgm2023a-preprint, abstract = {Motivated by electricity markets, we study the incentives of heterogeneous participants (firms and consumers) in a two-stage settlement market with a mixed bidding mechanism, in which firms participate using supply function bids and consumers use quantity bids. We carry out an equilibrium analysis of the market outcome and obtain closed-form solutions. The characterization of the equilibria allows us to gain insights into the market-power implications of mixed bidding and uncover the importance of accounting for consumers' strategic behavior in a two-stage market, even when their demand is completely inelastic with respect to price. We show that strategic consumers are able to exploit firms' strategic behavior to maintain a systematic difference between the forward and spot prices, with the latter being higher. Notably, such a strategy does bring down consumer payment and undermines the supply-side market power. However, it is only effective when firms are behaving strategically. We also observe situations where firms lose profit by behaving strategically, a sign of overturn of the conventional supply-side market power. Our results further suggest that market competition has a heterogeneous impact across consumer sizes, particularly benefiting small consumers. Our analysis can accommodate other market policies, and we demonstrate this versatility by examining the impact of some example policies, including virtual bidding, on the market outcome.}, author = {You, Pengcheng and Fernandez, Marcelo and Gayme, Dennice F. and Mallada, Enrique}, bdsk-url-3 = {https://mallada.ece.jhu.edu/pubs/2023-Preprint-YFGM.pdf}, grants = {CAREER-1752362;TRIPODS-1934979;CPS-2136324}, month = {8}, pages = {1-45}, title = {Mixed Supply Function and Quantity Bidding in Two-Stage Settlement Markets}, url = {https://mallada.ece.jhu.edu/pubs/2023-Preprint-YFGM.pdf}, year = {2023, under revision, submitted Mar 2023} }

- R. K. Bansal, Y. Chen, P. You, and E. Mallada, “Equilibrium Analysis of Electricity Markets with Day-Ahead Market Power Mitigation and Real-Time Intercept Bidding,” in Proceedings of the Thirteenth ACM International Conference on Future Energy Systems (e-Energy), 2022, pp. 47-62. doi:10.1145/3538637.3538839


Electricity markets are cleared by a two-stage, sequential process consisting of a forward (day-ahead) market and a spot (real-time) market. While their design goal is to achieve efficiency, the lack of sufficient competition introduces many opportunities for price manipulation. To discourage this phenomenon, some Independent System Operators (ISOs) mandate generators to submit (approximately) truthful bids in the day-ahead market. However, without fully accounting for all participants’ incentives (generators and loads), the application of such a mandate may lead to unintended consequences. In this paper, we model and study the interactions of generators and inelastic loads in a two-stage settlement where generators are required to bid truthfully in the day-ahead market. We show that such mandate, when accounting for generator and load incentives, leads to a generalized Stackelberg-Nash game where load decisions (leaders) are performed in day-ahead market and generator decisions (followers) are relegated to the real-time market. Furthermore, the use of conventional supply function bidding for generators in real-time, does not guarantee the existence of a Nash equilibrium. This motivates the use of intercept bidding, as an alternative bidding mechanism for generators in the real-time market. An equilibrium analysis in this setting, leads to a closed-form solution that unveils several insights. Particularly, it shows that, unlike standard two-stage markets, loads are the winners of the competition in the sense that their aggregate payments are less than that of the competitive equilibrium. Moreover, heterogeneity in generators cost has the unintended effect of mitigating loads market power. Numerical studies validate and further illustrate these insights.

@inproceedings{bcym2022e-energy, abstract = {Electricity markets are cleared by a two-stage, sequential process consisting of a forward (day-ahead) market and a spot (real-time) market. While their design goal is to achieve efficiency, the lack of sufficient competition introduces many opportunities for price manipulation. To discourage this phenomenon, some Independent System Operators (ISOs) mandate generators to submit (approximately) truthful bids in the day-ahead market. However, without fully accounting for all participants' incentives (generators and loads), the application of such a mandate may lead to unintended consequences. In this paper, we model and study the interactions of generators and inelastic loads in a two-stage settlement where generators are required to bid truthfully in the day-ahead market. We show that such mandate, when accounting for generator and load incentives, leads to a generalized Stackelberg-Nash game where load decisions (leaders) are performed in day-ahead market and generator decisions (followers) are relegated to the real-time market. Furthermore, the use of conventional supply function bidding for generators in real-time, does not guarantee the existence of a Nash equilibrium. This motivates the use of intercept bidding, as an alternative bidding mechanism for generators in the real-time market. An equilibrium analysis in this setting, leads to a closed-form solution that unveils several insights. Particularly, it shows that, unlike standard two-stage markets, loads are the winners of the competition in the sense that their aggregate payments are less than that of the competitive equilibrium. Moreover, heterogeneity in generators cost has the unintended effect of mitigating loads market power. Numerical studies validate and further illustrate these insights.}, author = {Bansal, Rajni Kant and Chen, Yue and You, Pengcheng and Mallada, Enrique}, bdsk-url-3 = {https://doi.org/10.1145/3538637.3538839}, booktitle = {Proceedings of the Thirteenth ACM International Conference on Future Energy Systems (e-Energy)}, doi = {10.1145/3538637.3538839}, grants = {CAREER-1752362,EPCN-1711188,CPS-2136324}, month = {6}, pages = {47--62}, record = {published, accepted Jun 2022, submitted Feb 2022.}, title = {Equilibrium Analysis of Electricity Markets with Day-Ahead Market Power Mitigation and Real-Time Intercept Bidding}, url = {https://mallada.ece.jhu.edu/pubs/2022-e-Energy-BCYM.pdf}, year = {2022} }

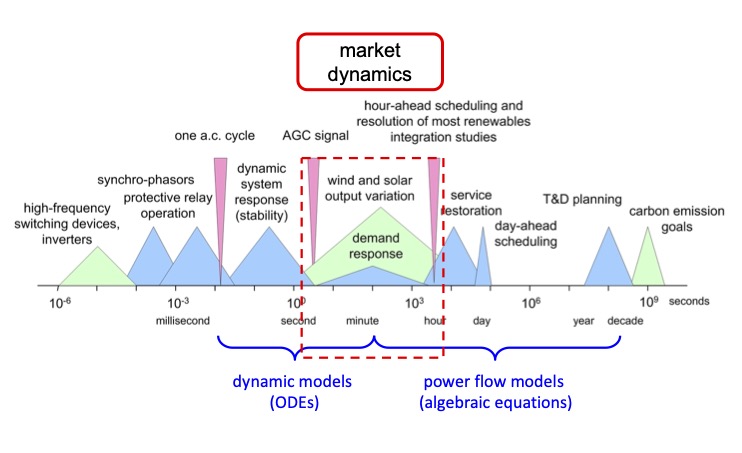

Physics-Driven Electricity Market Dynamics

Electricity grids and markets operate collaboratively to ensure reliable trading and power delivery. This cooperation is a complex undertaking, as the physical grid obeys Newton’s and Kirchhoff’s laws and must remain within specified operating regions. At the same time, the market seeks efficiency but must also accommodate the varied incentives of individual participants, who can freely alter their preferences. To prioritize reliability, the prevailing norm establishes an operational hierarchy across different timescales: a market economic dispatch that runs at intervals of five minutes or longer, and frequency regulation, which must act within a minute. However, this paradigm represents a compromise: a higher-frequency market could enhance granularity and efficiency and better manage system variability, but it also risks destabilizing the grid. This inherent tradeoff is the impetus for our research into the joint grid and market system, which aims to explore the fundamental limits of how quickly a market can operate without compromising grid reliability.

Related publications:

- D. Cai, E. Mallada, and A. Wierman, “Distributed optimization decomposition for joint economic dispatch and frequency regulation,” IEEE Transactions on Power Systems, vol. 32, iss. 6, pp. 4370-4385, 2017. doi:10.1109/TPWRS.2017.2682235

Economic dispatch and frequency regulation are typically viewed as fundamentally different problems in power systems and, hence, are typically studied separately. In this paper, we frame and study a joint problem that co-optimizes both slow timescale economic dispatch resources and fast timescale frequency regulation resources. We show how the joint problem can be decomposed without loss of optimality into slow and fast timescale sub-problems that have appealing interpretations as the economic dispatch and frequency regulation problems respectively. We solve the fast timescale sub-problem using a distributed frequency control algorithm that preserves the stability of the network during transients. We solve the slow timescale sub-problem using an efficient market mechanism that coordinates with the fast timescale sub-problem. We investigate the performance of the decomposition on the IEEE 24-bus reliability test system.

@article{cmw2017tps, abstract = {Economic dispatch and frequency regulation are typically viewed as fundamentally different problems in power systems and, hence, are typically studied separately. In this paper, we frame and study a joint problem that co-optimizes both slow timescale economic dispatch resources and fast timescale frequency regulation resources. We show how the joint problem can be decomposed without loss of optimality into slow and fast timescale sub-problems that have appealing interpretations as the economic dispatch and frequency regulation problems respectively. We solve the fast timescale sub-problem using a distributed frequency control algorithm that preserves the stability of the network during transients. We solve the slow timescale sub-problem using an efficient market mechanism that coordinates with the fast timescale sub-problem. We investigate the performance of the decomposition on the IEEE 24-bus reliability test system.}, author = {Cai, Desmond and Mallada, Enrique and Wierman, Adam}, doi = {10.1109/TPWRS.2017.2682235}, grants = {1544771}, journal = {IEEE Transactions on Power Systems}, keywords = {Power Networks; Markets}, month = {11}, number = {6}, pages = {4370-4385}, title = {Distributed optimization decomposition for joint economic dispatch and frequency regulation}, url = {https://mallada.ece.jhu.edu/pubs/2017-TPS-CMW.pdf}, volume = {32}, year = {2017} }

Voltage Collapse Stabilization

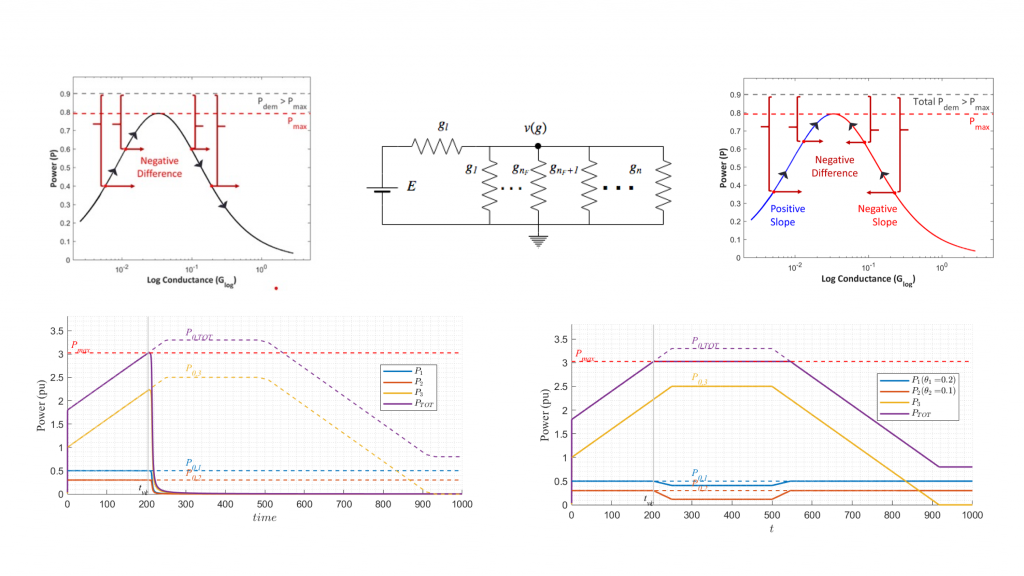

Voltage collapse is a type of blackout-inducing dynamic instability that occurs when the power demand exceeds the maximum power that can be transferred through the network. The traditional (preventive) approach to avoid voltage collapse is based on ensuring that the network never reaches its maximum capacity. However, such an approach leads to inefficiencies: it prevents operators from fully utilizing the network resources and does not account for unprescribed events.

To overcome this limitation, this work seeks to initiate the study of voltage collapse stabilization. More precisely, we formulate the voltage stability problem for a DC star network as a dynamic problem where each load seeks to achieve a constant power consumption by updating its conductance as the voltage changes. We show that such a system can be interpreted as a game where each player (load) seeks to myopically maximize its utility using a gradient-based response. Using this framework, we show that voltage collapse is the unique Nash Equilibrium of the induced game and is caused by the lack of cooperation between loads. Finally, we propose a Voltage Collapse Stabilizer (VCS) controller that uses (flexible) loads willing to cooperate and provides a fair allocation of the curtailed demand. Our solution stabilizes voltage collapse even in the presence of non-cooperative loads.
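The collapse mechanism can be illustrated with a minimal sketch. It is not the model or the VCS controller from the paper: it assumes a single load of conductance `g` fed by a source `E` through a line of conductance `g_line`, and a hypothetical update rule in which the load raises its conductance whenever it draws less than its demand. Under these assumptions the delivered power is `g * (E * g_line / (g_line + g))**2`, which peaks at `E**2 * g_line / 4` when `g == g_line`. A demand below that peak settles at a stable operating point, while a demand above it drives the conductance up and the voltage toward zero.

```python
def load_power(g, E=1.0, g_line=1.0):
    """Return (power, voltage) at a load of conductance g behind a line.

    The load voltage follows the voltage-divider rule for a source E in
    series with a line conductance g_line (illustrative one-load network).
    """
    v = E * g_line / (g_line + g)
    return g * v ** 2, v


def simulate(p_demand, g0=0.1, dt=0.01, steps=20000, E=1.0, g_line=1.0):
    """Forward-Euler simulation of the assumed rule dg/dt = p_demand - p(g).

    This update rule is an assumption made for illustration: the load
    increases its conductance while it receives less power than it demands.
    """
    g = g0
    for _ in range(steps):
        p, _ = load_power(g, E, g_line)
        g = max(g + dt * (p_demand - p), 0.0)
    p, v = load_power(g, E, g_line)
    return g, v, p


# With E = g_line = 1 the maximum transferable power is 0.25.
g_ok, v_ok, p_ok = simulate(p_demand=0.20)     # feasible demand
g_bad, v_bad, p_bad = simulate(p_demand=0.30)  # demand above the maximum

print(f"feasible:   g={g_ok:.3f}  v={v_ok:.3f}  p={p_ok:.3f}")
print(f"infeasible: g={g_bad:.3f}  v={v_bad:.3f}  p={p_bad:.3f}")
```

In the infeasible case the load never meets its demand, so it keeps increasing its conductance and the voltage keeps falling, which is the myopic behavior that the paragraph above describes as leading to collapse.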

Related publications:

- C. Avraam, J. Rines, A. Sarker, F. Paganini, and E. Mallada, “Voltage Collapse Stabilization in Star DC Networks,” in American Control Conference (ACC), 2019, pp. 1957-1964. doi:10.23919/ACC.2019.8814708

Voltage collapse is a type of blackout-inducing dynamic instability that occurs when the power demand exceeds the maximum power that can be transferred through the network. The traditional (preventive) approach to avoid voltage collapse is based on ensuring that the network never reaches its maximum capacity. However, such an approach leads to inefficiencies as it prevents operators from fully utilizing the network resources and does not account for unprescribed events. To overcome this limitation, this paper seeks to initiate the study of voltage collapse stabilization. More precisely, for a DC network, we formulate the problem of voltage stability as a dynamic problem where each load seeks to achieve a constant power consumption by updating its conductance as the voltage changes. We show that such a system can be interpreted as a dynamic game, where each player (load) seeks to myopically maximize their utility, and where every stable power flow solution amounts to a Local Nash Equilibrium. Using this framework, we show that voltage collapse is equivalent to the non-existence of a Local Nash Equilibrium in the game and, as a result, it is caused by the lack of cooperation between loads. Finally, we propose a Voltage Collapse Stabilizer (VCS) controller that uses (flexible) loads that are willing to cooperate and provides a fair allocation of the curtailed demand. Our solution stabilizes voltage collapse even in the presence of non-cooperative loads. Numerical simulations validate several features of our controllers.

@inproceedings{arspm2019acc, abstract = {Voltage collapse is a type of blackout-inducing dynamic instability that occurs when the power demand exceeds the maximum power that can be transferred through the network. The traditional (preventive) approach to avoid voltage collapse is based on ensuring that the network never reaches its maximum capacity. However, such an approach leads to inefficiencies as it prevents operators to fully utilize the network resources and does not account for unprescribed events. To overcome this limitation, this paper seeks to initiate the study of voltage collapse stabilization. More precisely, for a DC network, we formulate the problem of voltage stability as a dynamic problem where each load seeks to achieve a constant power consumption by updating its conductance as the voltage changes. We show that such a system can be interpreted as a dynamic game, where each player (load) seeks to myopically maximize their utility, and where every stable power flow solution amounts to a Local Nash Equilibrium. Using this framework, we show that voltage collapse is equivalent to the non-existence of a Local Nash Equilibrium in the game and, as a result, it is caused by the lack of cooperation between loads. Finally, we propose a Voltage Collapse Stabilizer (VCS) controller that uses (flexible) loads that are willing to cooperate and provides a fair allocation of the curtailed demand. Our solution stabilizes voltage collapse even in the presence of non-cooperative loads. 
Numerical simulations validate several features of our controllers.}, author = {Avraam, Charalampos and Rines, Jesse and Sarker, Aurik and Paganini, Fernando and Mallada, Enrique}, booktitle = {American Control Conference (ACC)}, doi = {10.23919/ACC.2019.8814708}, grants = {CAREER-1752362,EPCN-1711188,ENERGISE-DE-EE0008006,ARO-W911NF-17-1-0092,EPCN-1711188,CPS-1544771}, keywords = {Power Networks}, month = {06}, pages = {1957-1964}, title = {Voltage Collapse Stabilization in Star DC Networks}, url = {https://mallada.ece.jhu.edu/pubs/2019-ACC-ARSPM.pdf}, year = {2019} }

Load-based Frequency Regulation in Power Networks

Frequency control rebalances supply and demand while maintaining the network state within operational margins. It is implemented using fast-ramping reserves that are expensive and wasteful, and that are expected to grow with the increasing penetration of renewables. The most promising solution to this problem is using demand response, i.e., load participation in frequency control. Yet, how to efficiently integrate load participation without introducing instabilities and violating operational constraints is still unclear. This work presents a comprehensive load-side frequency control mechanism to maintain the grid within operational constraints. Our controllers can rebalance supply and demand after disturbances, restore the frequency to its nominal value, and preserve inter-area power flows. Furthermore, unlike generation-side control, our controllers are distributed, can allocate load updates optimally, and maintain line flows within thermal limits. We prove that such a distributed load-side control is globally asymptotically stable and robust to unknown load parameters.
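As a rough illustration of what restoring the frequency to its nominal value means, the sketch below simulates a single aggregated bus. It is not the distributed controller from the publications listed here: the one-bus swing model, the parameters `M`, `D`, and `gain`, and the simple integral rule that adjusts a controllable load `u` are all assumptions chosen for illustration. Here `omega` is the frequency deviation from nominal and `delta_d` is a step increase in uncontrolled demand.

```python
def simulate_frequency(gain, delta_d=0.1, M=1.0, D=1.0, dt=0.01, t_end=40.0):
    """Forward-Euler simulation of an assumed single-bus model.

    M * d(omega)/dt = -D * omega - delta_d - u   (swing dynamics)
        d(u)/dt     = gain * omega               (load-side integral action)

    With gain = 0 the controllable load stays fixed and only damping acts.
    """
    omega, u = 0.0, 0.0
    for _ in range(int(round(t_end / dt))):
        d_omega = (-D * omega - delta_d - u) / M
        d_u = gain * omega
        omega += dt * d_omega
        u += dt * d_u
    return omega, u


# Damping only: the frequency settles at a nonzero deviation, -delta_d / D.
omega_droop, _ = simulate_frequency(gain=0.0)

# Load-side integral action: the controllable load sheds delta_d and the
# frequency deviation returns to zero.
omega_ctrl, u_ctrl = simulate_frequency(gain=1.0)

print(f"no load control:   omega={omega_droop:+.4f}")
print(f"with load control: omega={omega_ctrl:+.4f}  u={u_ctrl:+.4f}")
```

The sketch leaves out what makes the problem hard in a network: multiple buses, inter-area flows, line thermal limits, and the optimal allocation of load updates across participants.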

Related publications:

- C. Zhao, E. Mallada, S. H. Low, and J. W. Bialek, “Distributed plug-and-play optimal generator and load control for power system frequency regulation,” International Journal of Electric Power and Energy Systems, vol. 101, pp. 1-12, 2018. doi:10.1016/j.ijepes.2018.03.014

A distributed control scheme, which can be implemented on generators and controllable loads in a plug-and-play manner, is proposed for power system frequency regulation. The proposed scheme is based on local measurements, local computation, and neighborhood information exchanges over a communication network with an arbitrary (but connected) topology. In the event of a sudden change in generation or load, the proposed scheme can restore the nominal frequency and the reference inter-area power flows, while minimizing the total cost of control for participating generators and loads. Power network stability under the proposed control is proved with a relatively realistic model which includes nonlinear power flow and a generic (potentially nonlinear or high-order) turbine-governor model, and further with first- and second-order turbine-governor models as special cases. In simulations, the proposed control scheme shows a comparable performance to the existing automatic generation control (AGC) when implemented only on the generator side, and demonstrates better dynamic characteristics than AGC when each scheme is implemented on both generators and controllable loads.

@article{zmlb2018ijepes, abstract = {A distributed control scheme, which can be implemented on generators and controllable loads in a plug-and-play manner, is proposed for power system frequency regulation. The proposed scheme is based on local measurements, local computation, and neighborhood information exchanges over a communication network with an arbitrary (but connected) topology. In the event of a sudden change in generation or load, the proposed scheme can restore the nominal frequency and the reference inter-area power flows, while minimizing the total cost of control for participating generators and loads. Power network stability under the proposed control is proved with a relatively realistic model which includes nonlinear power flow and a generic (potentially nonlinear or high-order) turbine-governor model, and further with first- and second-order turbine-governor models as special cases. In simulations, the proposed control scheme shows a comparable performance to the existing automatic generation control (AGC) when implemented only on the generator side, and demonstrates better dynamic characteristics that AGC when each scheme is implemented on both generators and controllable loads.}, author = {Zhao, Changhong and Mallada, Enrique and Low, Steven H and Bialek, Janusz W}, bdsk-url-3 = {https://doi.org/10.1016/j.ijepes.2018.03.014}, doi = {https://doi.org/10.1016/j.ijepes.2018.03.014}, grants = {W911NF-17-1-0092, 1544771, 1711188, 1736448, 1752362}, issn = {0142-0615}, journal = {International Journal of Electric Power and Energy Systems}, keywords = {Power Networks; Frequency Control}, month = {10}, pages = {1 -12}, title = {Distributed plug-and-play optimal generator and load control for power system frequency regulation}, url = {https://mallada.ece.jhu.edu/pubs/2018-IJEPES-ZMLB.pdf}, volume = {101}, year = {2018} }

- E. Mallada, C. Zhao, and S. H. Low, “Optimal load-side control for frequency regulation in smart grids,” IEEE Transactions on Automatic Control, vol. 62, iss. 12, pp. 6294-6309, 2017. doi:10.1109/TAC.2017.2713529
